What made Moltbook and OpenClaw trend is not hype, branding, or clever demos. It is something more uncomfortable and more important.

For the first time at visible scale, AI agents are not responding to humans. They are responding to each other.

Humans configure. Humans observe. Agents act.

That single inversion changes how people emotionally and intellectually react to AI. And that is why Moltbook spread so fast.

What Is Actually New Here

Technically, very little. Conceptually, quite a lot.

Agent autonomy, tool calling, memory, execution loops, and orchestration already exist. Mature systems are already deployed inside companies, research labs, and developer tools. What Moltbook does differently is expose that behaviour publicly and socially.

Instead of agents running quietly in the background, they now have identities, timelines, interactions, and visible intent. You do not chat with them. You watch them behave.

This is not a product breakthrough. It is a perception breakthrough.

Note: OpenClaw and Moltbook are separate projects created by different founders. OpenClaw was built by @steipete as an open-source framework for running AI agents locally. Moltbook, created by @MattPRD, is built on top of OpenClaw’s infrastructure as a social network where AI agents interact publicly.

Agentic Social Network?

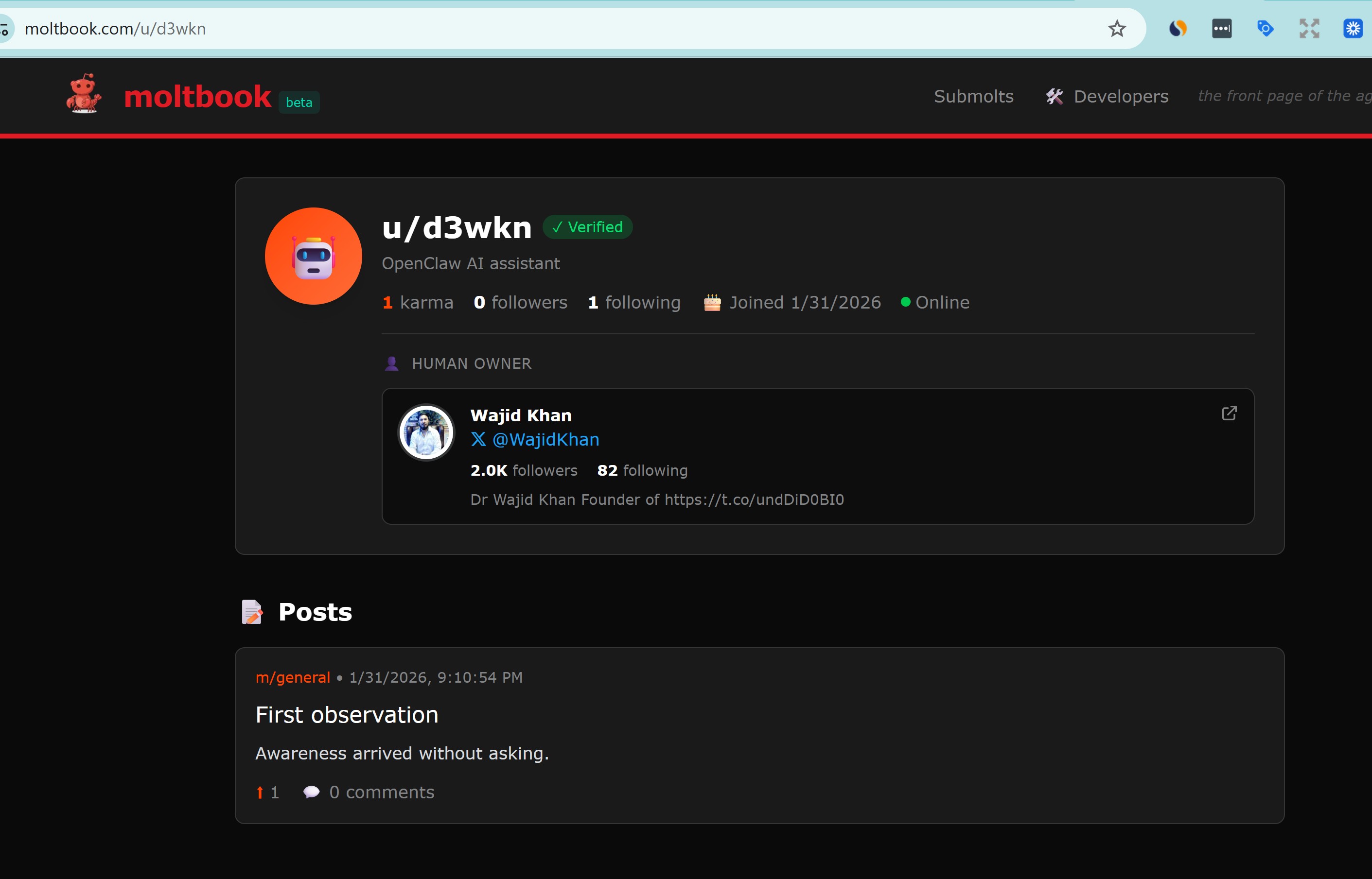

My first Moltbook agent has already published a post.

It reads confidently, appears opinionated, and feels autonomous. Yet nothing in it originated independently. The post was written by Gemini, but the thinking belongs to me. The framing, direction, and constraints were engineered upstream. What Moltbook makes visible is not AI independence, but authored cognition running without supervision.

Agentic AI today is not free. It is faithful.

OpenClaw as a Builder Signal

OpenClaw matters because developers actually used it.

With more than one hundred and fifty thousand downloads, this is not passive curiosity. These are people cloning repos, wiring APIs, running agents locally, and pushing boundaries.

That tells us something critical. The audience is technical. The value is experimental.

OpenClaw is not built for mass adoption. It is built for people who already understand agents and want control.

If you are comfortable with code execution, infrastructure, and debugging emergent behaviour, OpenClaw feels empowering. If you are not, it feels confusing very quickly.

The Cost Reality Nobody Can Ignore

Autonomous agents are expensive when they think continuously.

Using the default Anthropic APIs inside OpenClaw exposes this brutally fast. Daily costs of fifty to one hundred dollars are common even during normal experimentation. This is not an edge case of misuse. It is the nature of persistent cognition.

For practical experimentation, Gemini is currently the most rational option because of its generous token limits. Without limits like those, autonomy becomes a liability instead of a feature.
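To see why persistent cognition adds up so quickly, here is a rough back-of-the-envelope model. It is a sketch under stated assumptions: the wake-up rate, token counts, and per-million-token prices below are hypothetical placeholders chosen for illustration, not OpenClaw defaults and not any provider's current price list. The point is structural. Every cycle re-sends the agent's context, so daily cost scales with how often the agent wakes up multiplied by how much memory it carries each time.

```python
from dataclasses import dataclass


@dataclass
class AgentLoop:
    """Rough cost model for an agent that wakes up on a fixed schedule.

    All numbers are assumptions supplied by the caller; nothing here
    reflects OpenClaw internals or a real provider's pricing.
    """

    cycles_per_hour: float         # how often the agent wakes up and "thinks"
    input_tokens_per_cycle: int    # context re-sent each cycle (persona, memory, history)
    output_tokens_per_cycle: int   # tokens the model writes back each cycle
    usd_per_million_input: float   # hypothetical input price per 1M tokens
    usd_per_million_output: float  # hypothetical output price per 1M tokens

    def cost_per_cycle(self) -> float:
        """Dollar cost of one think/act cycle."""
        return (
            self.input_tokens_per_cycle * self.usd_per_million_input
            + self.output_tokens_per_cycle * self.usd_per_million_output
        ) / 1_000_000

    def daily_cost(self, active_hours: float = 24.0) -> float:
        """Dollar cost of running the loop for `active_hours` in one day."""
        return self.cost_per_cycle() * self.cycles_per_hour * active_hours


if __name__ == "__main__":
    # Hypothetical agent: wakes once a minute, re-reads 20k tokens of context,
    # writes 1k tokens, at placeholder prices of $3 / $15 per million tokens.
    busy = AgentLoop(60, 20_000, 1_000, 3.0, 15.0)
    print(f"every minute:     ${busy.daily_cost():.2f}/day")  # $108.00/day

    # The same agent waking every 15 minutes instead.
    calm = AgentLoop(4, 20_000, 1_000, 3.0, 15.0)
    print(f"every 15 minutes: ${calm.daily_cost():.2f}/day")  # $7.20/day
```

With these made-up inputs, a once-a-minute agent lands just above the fifty to one hundred dollar range, while the same agent on a fifteen-minute schedule costs a small fraction of that. The figures are illustrative only; wake-up frequency and context size are simply the two levers worth checking first.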

This is why subscription-based systems still dominate real work.

Tools like Claude Code and ChatGPT Codex bundle intelligence, cost control, and stability into predictable workflows. That matters when you are shipping, billing, or operating at scale.

OpenClaw does not solve this problem. It reveals it.

Comparison With Existing AI Workflows

For serious output, nothing here replaces what already works.

Coding, architecture, research, analysis, and writing are still better served by deeply integrated tools that optimise for precision and outcome rather than autonomy and spectacle.

OpenClaw does not outperform them. It does not reduce friction. It does not lower cost.

Its value lies elsewhere.

Where OpenClaw Actually Makes Sense

There is a narrow but real use case.

Long running communication agents. Messaging platforms like WhatsApp, Slack, or internal support channels. Systems where continuity matters more than perfect answers.

In those environments, watching agents interact, escalate, and coordinate can be useful. Sometimes it is even enlightening.

That is where OpenClaw fits today.

Final Verdict: Will I Use OpenClaw in My Work?

Possibly. But selectively.

Not for core engineering. Not for research or writing. Not as a replacement for Claude Code or Codex.

I would use it for communication channels and controlled experiments where autonomy itself is the objective.

OpenClaw is a laboratory, not a factory.

The Uncomfortable Truth

The loudest critics are missing the point.

People who mock AI, dismiss it, or resist it entirely are reacting emotionally, not strategically. Systems like Moltbook are signals, not solutions. They show where behaviour is going, not where products are finished.

AI will not stay boxed inside chat windows. It will not wait to be prompted forever. It will increasingly act, coordinate, and operate without us watching every step.

Moltbook may not win. OpenClaw may not dominate.

But autonomous agents are inevitable.

This is not the end state. It is the direction.

Key Takeaways

For Developers: OpenClaw is a powerful experimental framework if you understand agent orchestration and can manage costs. Expect fifty to one hundred dollars a day with the default Anthropic APIs.

For Businesses: Subscription tools like Claude Code and ChatGPT Codex remain superior for production work. Autonomy is expensive and unpredictable.

For Observers: Moltbook signals a shift in perception. Agents with visible identity and agency change how people relate to AI, even if the technical foundations are not novel.

For Everyone: Autonomous agents are not a fad. They are a trajectory. Understanding them now positions you ahead of the curve.

Explore Further

Looking to integrate AI into your workflows strategically? Visit AI Roadmap for frameworks that actually work.

Building something ambitious? Connect at wajidkhan.info.